We had issues deploying changes to a large web farm a few weeks back. We had automated the startup of the 25 web servers using a DevOps tool, but when it tried to bring them all up at once it took forever and the web servers got timeout errors. We resolved this by configuring huge pages on the metadata database.

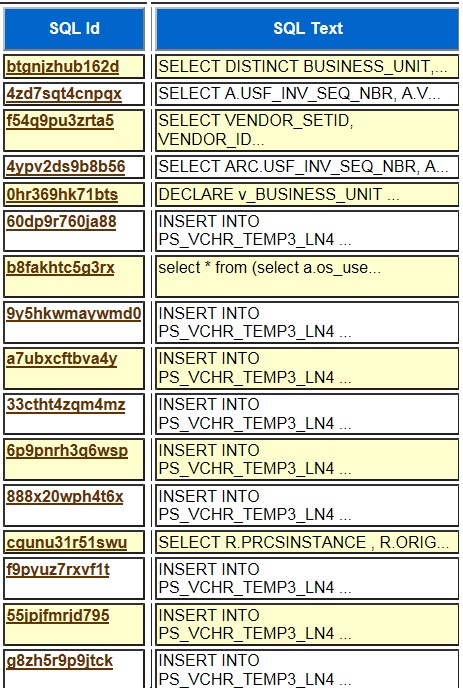

I mentioned the unexpectedly large impact of huge pages on login time in a previous post. But we had not realized that a small metadata database with a 5 gigabyte SGA would need huge pages. I should mention that this is Oracle 11.2.0.3 on Linux.

The web farm has 25 servers with 25 database connections each, 625 in all. I think the number of connections from each server should be 5 or fewer, but I have not convinced others to reduce the number. If you have a metadata database with 4 cores and 625 connections from 25 web servers, the web servers cannot use all of those connections at once. A 4-core database server can only process 10 or 20 SQL statements at once if they are I/O bound. If they are all CPU bound you are looking at 4 concurrently active SQL statements. If you kick off 625 SQL statements at once the load will go through the roof and everything will time out.

I thought about putting in shared servers on the metadata database to force the 625 sessions to funnel through 12 shared servers so the database server would not be overwhelmed should all 625 connections choose to run at once. But the only problem we had was with logins. All 625 sessions were logging in at the same time when our DevOps tool started the web farm. Huge pages resolved this issue by making logins 10-20 times faster.
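A rough sketch of the connection arithmetic above, in Python. The server, connection, and core counts come from the post; the function name is made up for illustration.

```python
def total_sessions(web_servers: int, connections_per_server: int) -> int:
    """Total database sessions the web farm can open."""
    return web_servers * connections_per_server

CORES = 4  # cores on the metadata database server (from the post)

current = total_sessions(25, 25)   # the farm as configured
proposed = total_sessions(25, 5)   # the smaller pool size suggested above

print(f"configured sessions: {current}")
print(f"with 5 per server:   {proposed}")
print(f"configured sessions per core: {current / CORES}")
```

Even the smaller pool leaves far more sessions than a 4-core server can run at once, so the pool size only sets an upper bound on how bad a pile-up can get.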

The database was in a 16 gigabyte Linux VM with 4 cores and a 5 gigabyte SGA. Without huge pages each login took about 2.5 seconds. With them each login took about 0.15 seconds. Without huge pages the load on the database server went over 100 when all 625 sessions started at once. With huge pages the load never exceeded 1. Without huge pages the web farm never came up cleanly, and with them the farm came up quickly without error. So, if you have a Linux VM with several gigabytes in your SGA you might want to use huge pages.
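To get a feel for why the startup fell over, here is a back-of-the-envelope estimate in Python. It assumes each login is pure CPU work and that the work spreads evenly over the 4 cores, which is a simplification and not something measured in the post. The function name is made up for illustration.

```python
def storm_seconds(sessions: int, seconds_per_login: float, cores: int) -> float:
    """Best-case wall-clock time for a login storm, assuming logins are
    purely CPU-bound and spread perfectly across the cores."""
    return sessions * seconds_per_login / cores

without_hp = storm_seconds(625, 2.5, 4)    # 2.5 s per login without huge pages
with_hp = storm_seconds(625, 0.15, 4)      # 0.15 s per login with huge pages

print(f"without huge pages: {without_hp:.1f} s")
print(f"with huge pages:    {with_hp:.1f} s")
print(f"speedup:            {without_hp / with_hp:.1f}x")
```

Under those assumptions the storm takes on the order of six and a half minutes without huge pages and well under half a minute with them, which lines up with web servers timing out in one case and not the other.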

Bobby

P.S. This may be an 11.2 bug. Ever since I noticed this behavior, the dramatic difference in login time with and without huge pages has seemed too large to be caused by having to spin up a larger page table with 4K pages. 2.5 seconds of CPU is an eternity just to set up a page table for 5 gigabytes worth of virtual memory. I timed login on a 12.1.0.2 and an 18c database with a 5 gig SGA and it was around 0.15 seconds without huge pages. I verified that the problem exists on 11.2.0.4 as well as 11.2.0.3. I think our 11.2 systems are still on RedHat Linux 6, so it may be a combination of database and OS version.
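For scale, here is the page table arithmetic for a 5 gigabyte SGA, sketched in Python. It assumes 8-byte page table entries (the x86-64 size) and 2M huge pages, and it counts only the lowest-level entries a process would need if it mapped every page of the SGA. The helper name is made up for illustration.

```python
GIB = 1024 ** 3
PTE_BYTES = 8  # size of one page table entry on x86-64

def pages_needed(sga_bytes: int, page_bytes: int) -> int:
    """Number of pages needed to map the SGA, rounded up."""
    return -(-sga_bytes // page_bytes)

sga = 5 * GIB
small = pages_needed(sga, 4 * 1024)         # 4K pages
huge = pages_needed(sga, 2 * 1024 * 1024)   # 2M huge pages

print(f"4K pages: {small} entries, {small * PTE_BYTES / 1024**2} MiB of entries")
print(f"2M pages: {huge} entries, {huge * PTE_BYTES / 1024} KiB of entries")
```

That is about 1.3 million entries and 10 MiB per process with 4K pages, against 2,560 entries and 20 KiB with huge pages. The gap is large, but filling in 10 MiB of entries still does not obviously account for 2.5 seconds of CPU.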

P.P.S. This is why blogging about Oracle is such a pain sometimes. It is hard to get things right without extensive testing. But then again, the stuff I said was all true. Switching to huge pages did resolve our issues. I guess, as always, your mileage may vary.

P.P.P.S. See the comments below about pre_page_sga=TRUE. The people who commented are correct. As far back as Oracle 9.2, databases with pre_page_sga=TRUE and large SGAs can have logins that take a couple of seconds. See this Oracle bug:

Bug 5072402 : SLOW CONNECT TIME WITH SQLPLUS TO INSTNACE WITH 22GIG SGA AND PRE_PAGE_SGA

According to Oracle, Bug 5072402 is not a bug but intended behavior. Oracle changed this behavior in Oracle 12 according to this document:

PRE_PAGE_SGA Behaviour Change in Oracle Release 12c (Doc ID 1987975.1)

My own testing bears out the idea that pre_page_sga=TRUE slows down logins in 11.2 and does not slow them down in 12.1.
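The post does not show the exact command behind the timings that follow, so here is a small, hypothetical Python harness for repeating this kind of test. `time_command` is a made-up helper that times any command; to time a login you would pass something like `["sqlplus", "-L", "-S", "user/password@db"]`, where the connect string is a placeholder and the assumption is that sqlplus exits as soon as it sees end-of-file on standard input.

```python
import subprocess
import sys
import time

def time_command(cmd: list[str], runs: int = 5) -> float:
    """Run cmd several times and return the fastest wall-clock time in seconds."""
    best = float("inf")
    for _ in range(runs):
        start = time.perf_counter()
        # Standard input is at end-of-file so an interactive client exits at once.
        subprocess.run(cmd, stdin=subprocess.DEVNULL,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL,
                       check=False)
        best = min(best, time.perf_counter() - start)
    return best

# Stand-in command so the sketch runs anywhere; swap in the real login command.
elapsed = time_command([sys.executable, "-c", "pass"], runs=3)
print(f"fastest run: {elapsed:.3f} s")
```

Taking the fastest of several runs filters out one-off noise such as a cold file cache. For a login storm like the one described earlier, the average or worst case under load may matter more.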

My laptop 11.2.0.3

Slow with pre_page_sga=TRUE

real 0m1.039s

pre_page_sga boolean TRUE

sga_max_size big integer 3G

sga_target big integer 3G

Fast with pre_page_sga=FALSE

real 0m0.218s

pre_page_sga boolean FALSE

sga_max_size big integer 3G

sga_target big integer 3G

My laptop 12.1.0.2

real 0m0.084s

pre_page_sga boolean TRUE

sga_max_size big integer 3G

sga_target big integer 3G

No HugePages

| PAGESIZE | AVAILABLE_PAGES | EXPECTED_PAGES | ALLOCATED_PAGES |
|---|---|---|---|
| 4K | Configured | 4 | 786436 |
| 2048K | 0 | 1537 | 0 |
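The same "no huge pages" state can be confirmed from the operating system side by looking at the huge page counters in `/proc/meminfo`. Below is a minimal Python sketch; the function name is made up, and the sample text is illustrative, not taken from the servers in this post.

```python
def hugepage_info(meminfo_text: str) -> dict[str, int]:
    """Pull the huge page counters out of /proc/meminfo-style text."""
    wanted = {"HugePages_Total", "HugePages_Free", "Hugepagesize"}
    info = {}
    for line in meminfo_text.splitlines():
        name, _, rest = line.partition(":")
        if name in wanted:
            info[name] = int(rest.split()[0])  # Hugepagesize is reported in kB
    return info

# Sample text shaped like /proc/meminfo on a box with no huge pages
# configured. The values are illustrative only.
sample = """MemTotal:       16333852 kB
HugePages_Total:       0
HugePages_Free:        0
Hugepagesize:       2048 kB
"""

info = hugepage_info(sample)
print(info)
```

On a real Linux host you would pass in `open("/proc/meminfo").read()` instead of the sample. A `HugePages_Total` of 0 means none are configured, which matches the 0 available 2048K pages shown above.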

P.P.P.P.S. I have a production HP-UX Itanium server with a 46 gig SGA and pre_page_sga=TRUE. It takes about 1 second to log in. I think it is the same effect, but on Linux the 4K page size may exaggerate the effect of pre_page_sga=TRUE whereas HP-UX may handle large SGAs better. Anyway, I learned something new. Thank you to those who participated in the comments.

I spun up a Delphix Oracle 11.2.0.3 HP-UX Itanium VDB with a 32 gig SGA and pre_page_sga=TRUE and the login time was instantaneous. Odd. I guess "your mileage may vary" still applies.