I was in a short programming contest at work for three days last week. My team got second place! We used a LangChain vector store in a MongoDB Atlas cluster so I thought I would at least document the links we referred to and videos I watched for others who are interested.

First I watched this video about LangChain:

I recommend watching the whole thing but the part about VectorStores starts at 25:22.

I got Python 3.9 set up on an Amazon EC2 instance and ran through these steps with the FAISS database:

https://python.langchain.com/docs/modules/data_connection/vectorstores/

Then I watched this video about the new MongoDB Atlas Vector Search feature:

This video is totally worth watching. I got a ton out of it. After watching the video I redid the VectorStore example but with MongoDB Atlas as the database:

https://python.langchain.com/docs/integrations/vectorstores/mongodb_atlas

I got into a discussion with ChatGPT about why they are called “vectors” instead of “points”. A vector is just an array or list of floating point numbers. In math this could be a point in some multi-dimensional space. ChatGPT didn’t seem to realize that software does sometimes use the vectors as vectors in the math sense. The MongoDB Atlas index we used relies on cosine similarity, which must treat each vector as an arrow from some common starting point, like all zeroes, pointing towards the point represented by the list of numbers in the “vector”.
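
To make the "arrow from the origin" idea concrete, here is a minimal sketch of cosine similarity in plain Python. It is not what MongoDB Atlas runs internally; the function name and the sample vectors are made up for illustration. The point is that only the direction of each vector matters, not its length.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between a and b, both treated as arrows from the origin."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Same direction, different lengths: result is close to 1.
same_direction = cosine_similarity([1.0, 2.0], [2.0, 4.0])

# At right angles: result is close to 0.
perpendicular = cosine_similarity([1.0, 0.0], [0.0, 3.0])

# Opposite directions: result is close to -1.
opposite = cosine_similarity([1.0, 2.0], [-1.0, -2.0])

print(same_direction, perpendicular, opposite)
```

If the numbers were only points, the natural comparison would be the distance between them. Cosine similarity instead compares the angle between the two arrows, which is why [1, 2] and [2, 4] count as a perfect match here.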

When I created the search index in MongoDB Atlas I forgot to name it, and the search did not work because the code refers to the index by name. For the sample the index name has to be langchain_demo. By default the index name is “default”.

LangChain itself was new to me. I watched the first video all the way through but there is a lot I did not use or need. I had played with OpenAI in Python already following the Python version of this quick start:

https://platform.openai.com/docs/quickstart/build-your-application

I edited the example script and played with different things. But I had never tried LangChain which sits on top of OpenAI and simplifies and expands it.

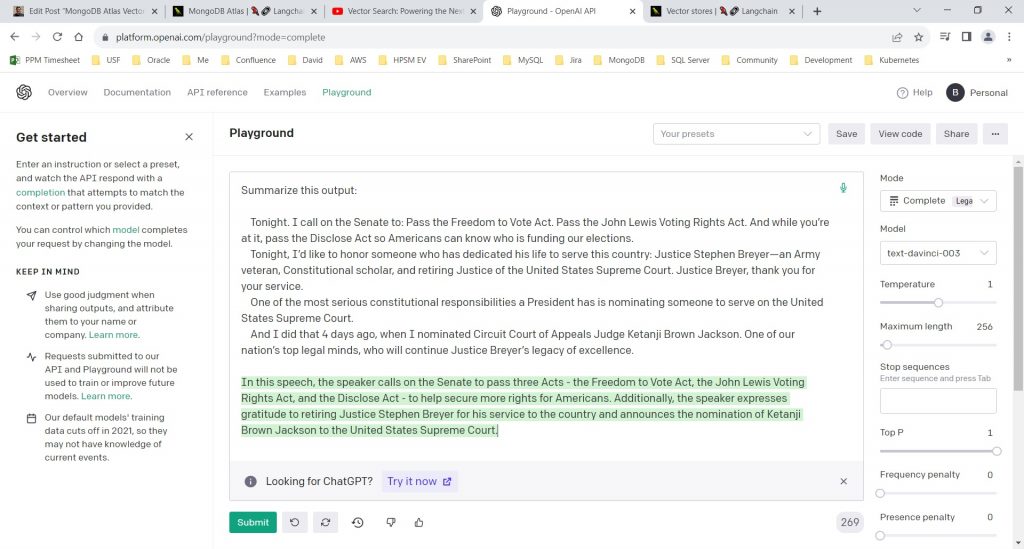

The project we worked on for the contest implemented the architecture documented at 28:57 in the MongoDB video above. If you look at the MongoDB Atlas vector store example, this “information flow” would take the output from docsearch.similarity_search(query) and send it through OpenAI to summarize. If you take the piece of the President’s speech that is returned by the similarity search and paste it into OpenAI’s playground the result looks like this:

So, our programming project involved pulling in documents that were split up into pieces, retrieving a piece with a similarity query against the vector store, and then running that piece through OpenAI to generate a readable English summary.
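
The flow can be sketched end to end in plain Python. This is a toy stand-in and not our contest code: a word-count "embedding" replaces the OpenAI embeddings, an in-memory list replaces the MongoDB Atlas vector store, and the last step only builds the prompt that would be sent to OpenAI instead of calling it. All function names and the sample text are hypothetical.

```python
import math
import re
from collections import Counter

def split_into_chunks(text):
    """Split a document into pieces; here, one piece per sentence."""
    return re.split(r"(?<=[.!?])\s+", text.strip())

def toy_embed(text):
    """Stand-in for a real embedding model: word counts as a sparse vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine_similarity(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def similarity_search(query, store, k=1):
    """Return the k stored chunks whose vectors are most similar to the query."""
    q = toy_embed(query)
    ranked = sorted(store, key=lambda item: cosine_similarity(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def build_summary_prompt(chunk):
    """Build the text that would be sent to the language model for summarizing."""
    return "Summarize the following passage in plain English:\n\n" + chunk

document = (
    "The database server is backed up every night at midnight. "
    "Index rebuilds are scheduled for the weekend maintenance window. "
    "New user accounts must be approved by the security team."
)

# 1. Split the document and store each piece with its vector.
chunks = split_into_chunks(document)
store = [(chunk, toy_embed(chunk)) for chunk in chunks]

# 2. Retrieve the piece most similar to the question.
best = similarity_search("When is the database backed up?", store)[0]

# 3. Build the prompt that would go to the model for a readable summary.
prompt = build_summary_prompt(best)
print(prompt)
```

In the real project the vector store did the work of steps 1 and 2, and step 3 was an actual call to OpenAI.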
