This is a follow up to an earlier post about the 350 second timeout that is built into Amazon Web Services’ (AWS) Gateway Load Balancer (GWLB).

The earlier post was about Debezium (DBZ) using its Oracle Connector to pull data from an on-premises Oracle database into Kafka in AWS. DBZ used JDBC to connect to the Oracle database so I built a simple Java program that uses JDBC to mimic the behavior we saw in DBZ. With DBZ we were hanging if any SQL statement that DBZ ran took >= 350 seconds to run. If it did, then the Oracle session hung and Debezium never got past that SQL statement.

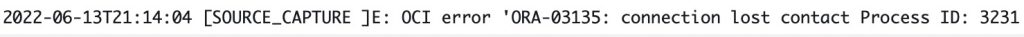

But for AWS Database Migration Service (DMS) the symptoms were different. For DMS I could not find any SQL statement that ran for >= 350 seconds. All the SQL statements ran much faster. But we did see ORA-03135 errors in DMS’s log like this:

DMS seemed to be waiting >= 350 seconds between SQL statements in certain cases, maybe doing a large load, and that seemed to be causing the ORA-03135 errors. I also saw DMS Oracle sessions waiting for more than 350 seconds on “SQL*Net message from client” idle waits. These seemed to eventually go away after 6000 or more seconds. I think that the GWLB was silently dropping the network connection, but the Oracle sessions still existed until at some point they realized that the network connection was gone. But I wanted to recreate the problem in a simple test case to prove that the 350 second GWLB timeout would throw the ORA-03135 error and leave the DMS Oracle sessions hanging for several thousand seconds in the SQL*Net wait that I was seeing in our production DMS sessions.

To recreate this error and the orphaned session behavior and to show that it was due to the GWLB 350 second timeout and not some other weird network problem I did some simple tests with SQL*Plus and Instant Client. I installed these on an AWS EC2 Linux machine that already had the firewall and security group configuration setup to allow a connection from the EC2 to an on-premises Oracle database. Then I just logged into that database and sat idle for different lengths of time before running a select statement. I narrowed it down to about 350 seconds as the cutoff point where the session is lost due to too much idle time.

Here is my test with < 350 second wait:

SQL> connect myuser/mypassword@mydatabase

Connected.

SQL> host sleep 348

SQL> select * from dual;

D

-

X

Elapsed: 00:00:00.11Here is my test with > 350 seconds wait:

SQL> connect myuser/mypassword@mydatabase

Connected.

SQL> host sleep 351

SQL> select * from dual;

select * from dual

*

ERROR at line 1:

ORA-03135: connection lost contact

Process ID: 1208

Session ID: 57 Serial number: 21111Narrowing it down to 350 seconds at the cutoff showed that just logging in and waiting for > 350 seconds causes an ORA-03135 error. I also verified that the associated Oracle sessions hung around for > 350 seconds stuck on the “SQL*Net message from client” wait. Sure, DMS could be throwing a ORA-03135 error due to some unrelated network problem, but my SQL*Plus test proved that any Oracle connection from our AWS environment back to our on-premises Oracle databases will throw a ORA-03135 error and leave orphaned Oracle sessions if it sits idle for >= 350 seconds unless we put the fix in place that I mentioned in my earlier post.

The fix is to set the Linux parameter net.ipv4.tcp_keepalive_time to < 350 seconds and to use (ENABLE=BROKEN) in your connection strings. Once I put these in place for my SQL*Plus test I could wait longer than 350 seconds and then run a select statement with no errors.

Since March when we noticed this timeout with Debezium I have suspected the timeout would also affect DMS, but I did not know that the symptoms would be throwing ORA-03135 errors and leaving orphaned sessions when the time idle between SQL statements exceeded the timeout. It took a few tickets working with AWS support but last week they put net.ipv4.tcp_keepalive_time < 350 seconds and (ENABLE=BROKEN) in their global DMS configuration for all their customers.

So, from now on anyone setting up a new DMS replication instance version 3.4.5 or later should be able to replicate data from AWS to an on-premises Oracle database through Amazon’s Gateway Load Balancer without facing these ORA-03135 errors. If you created your replication instance before last week you should create a new one >= version 3.4.5 to take advantage of this fix, especially if you are seeing ORA-03135 errors in your logs.

Bobby

Excellent sleuthing! As an OCI and Azure person I didn’t know what “DMS” was until I clicked the link on “DMS replication instance” near the end so thanks for including that.

Thanks for your comment Rich. It is funny that I linked and defined a bunch of other terms but not the main one, DMS. I’ve tweaked the post to link to a page about DMS earlier in the text. It is great getting a little feedback from others because they see things that I gloss right over..

Bobby